DNS cache snooping is a fun technique that involves querying DNS servers to see if they have specific records cached. Using this technique, we can harvest a bunch of information from DNS servers to see which domain names users have recently accessed, possibly revealing some interesting and maybe even embarrassing information. In his nifty paper on the subject, Luis Grangeia explains, "The most effective way to snoop a DNS cache is using iterative queries. One asks the cache for a given resource record of any type (A, MX, CNAME, PTR, etc.) setting the RD (recursion desired) bit in the query to zero. If the response is cached the response will be valid, else the cache will reply with information of another server that can better answer our query, or most commonly, send back the root.hints file contents."

I've always liked that paper, and use the technique all the time in pen tests when my clients have a badly configured DNS server. I've always implemented the technique using a Perl script on Linux. But, last Friday, I was on a hot date with my wife at Barnes & Noble, thinking about that paper in the back of my mind, when it struck me. Heck, I can do that in a single* Windows command. Here it is, using the default of A records:

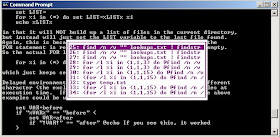

C:\> for /F %i in (names.txt) do @echo %i & nslookup -norecurse %i [DNSserver] | find "answer" & echo.

This command is built from a FOR /F loop, which iterates over the contents of the file names.txt. In that file, just put all of the names you want to check in the target DNS server's cache, one name per line. At each iteration through the loop, we turn off command echo (@), display the current name we are checking (echo %i), and then run nslookup with the -norecurse option. This option makes nslookup emit queries with the Recursion Desired bit set to zero. Most DNS servers will honor this request, dutifully returning our answer from their cache if they have it, and sending us a list of DNS servers they'd forward to (such as the root name servers) if they don't have it cached. We look up the name we've iterated to (%i) against the chosen DNS server ([DNSserver]). I scrape through the results looking for the string "answer", because if we get back a non-authoritative answer, nslookup's output will say "answer". I added an extra blank line (echo.) for readability.

So, if the entry is in the target DNS server's cache, my output will display the given name followed by a line that says "Non-authoritative answer:".

Note that if the target DNS server is authoritative for the given domain name, our output will display only the name itself, without the "Non-authoritative answer:" text, because the record likely wasn't pulled from the cache but was instead retrieved from the target DNS server's own zone files.

A lot of people discount nslookup in Windows, thinking that it's not very powerful. However, it's got a lot of interesting options for us to play with. And, when incorporated into a FOR /F loop as I've done above, all kinds of useful iterations are possible.

*Well, I use the term "single" here loosely. It's several commands smushed together on a single command line. But, it's not too complex, and it isn't actually that hard to type.

Hal Responds:

Ed, didn't anybody teach you that you should configure your DNS servers to refuse cache queries? Otherwise you could well end up being used as an amplifier in a DNS denial of service attack.

Putting aside Ed's poor configuration choices for the moment, I can replicate Ed's loop in the shell using the "host" command:

$ for i in `cat names.txt`; do host -r $i [nameserver]; done

"-r" is how you turn off recursion with the host command. The "host" command is silent if it doesn't retrieve any information, so I don't need to do any extra parsing to separate out the negative responses like Ed does. In general, "host" is much more useful for scripting kinds of applications than the "dig" command is ("nslookup" has been deprecated on Unix, by the way, so I'm not going to bother with it here).
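If you'd rather have something reusable than a one-liner, here's a rough sketch of the same loop as a pair of bash functions. The function names (lookup, snoop_cache) are made up for illustration, and the match on "has address" assumes the usual wording host uses when it prints an A record; adjust the pattern if you're after other record types. Splitting the query into its own function is just a convenience so you can swap in another tool or a stub.

```shell
#!/usr/bin/env bash

# lookup NAME SERVER: send one non-recursive query with host.
# Hypothetical helper; kept separate so it can be replaced or stubbed.
lookup() {
    host -r "$1" "$2" 2>/dev/null
}

# snoop_cache NAMES_FILE SERVER: print each name for which the server
# handed back an A record without being asked to recurse.
# Assumption: host reports A records as "<name> has address <ip>".
snoop_cache() {
    local names_file=$1 server=$2 name
    while IFS= read -r name || [ -n "$name" ]; do
        [ -z "$name" ] && continue        # skip blank lines
        if lookup "$name" "$server" </dev/null | grep -q "has address"; then
            printf '%s\n' "$name"
        fi
    done < "$names_file"
}
```

You'd call it as "snoop_cache names.txt [nameserver]". As with Ed's version, a name the server is authoritative for will also show up here, so a hit only suggests a cached record.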

If you happen to be on the system where the name server process is running, you can actually get it to dump its cache to a local file:

# rndc dumpdb -cache

The cache is dumped to a file called "named_dump.db" in the current working directory of the name server process. It's just a text file, so you can grep it to your heart's content.
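Plain grep works fine for one name, but for a whole list something like the following sketch may be handier. It assumes the dump uses zone-file style lines with the fully qualified owner name (trailing dot included) in the first column, comment lines starting with ";", and continuation lines starting with whitespace; check that against your own named_dump.db, since the layout can vary between BIND versions. The function name cached_in_dump is my own invention.

```shell
#!/usr/bin/env bash

# cached_in_dump NAMES_FILE DUMP_FILE: print each name from NAMES_FILE
# that appears as an owner name in the dump file (case-insensitive).
# Assumption: owner names sit in column one and end with a dot.
cached_in_dump() {
    local names_file=$1 dump_file=$2
    awk '
        # First file: remember each wanted name, keyed in lowercase
        # with the trailing dot that the dump is assumed to use.
        NR == FNR { if ($1 != "") want[tolower($1) "."] = $1; next }
        {
            c = substr($0, 1, 1)
            # Skip comments, blank lines, and continuation lines.
            if (c == ";" || c == " " || c == "\t" || c == "") next
            owner = tolower($1)
            if (owner in want) { print want[owner]; delete want[owner] }
        }
    ' "$names_file" "$dump_file"
}
```

Each matching name is printed once, in the order it first shows up in the dump.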

But where is the current working directory of the name server process? Well, you could check the value of the "directory" option in named.conf, or just use lsof:

# lsof -a -c named -d cwd

COMMAND PID USER FD TYPE DEVICE SIZE NODE NAME

named 26808 named cwd DIR 253,4 4096 917516 /var/named/chroot/var/run/named

The command line options here tell lsof to show the current working directory ("-d cwd") of the named process ("-c named"). The "-a" means to "and" these two conditions together rather than "or"-ing them, which would be the normal default for lsof.
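If you want that directory in a script rather than on your screen, lsof's field output ("-F") is easier to parse than the columns above. Here's a minimal sketch, assuming "-F n" prints each file name on its own line prefixed with "n" (alongside "p" and "f" lines for the PID and file descriptor). The function names parse_lsof_cwd and named_cwd are hypothetical.

```shell
#!/usr/bin/env bash

# parse_lsof_cwd: read "lsof -F n" output on stdin and print the first
# file name, i.e. the first line starting with "n", minus the prefix.
parse_lsof_cwd() {
    sed -n 's/^n//p' | head -n 1
}

# named_cwd: print the current working directory of the named process.
# Typically needs root to see another user's process.
named_cwd() {
    lsof -a -c named -d cwd -F n 2>/dev/null | parse_lsof_cwd
}
```

With that in place, something like grep -i example.com "$(named_cwd)/named_dump.db" goes straight to the dump file.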

Man, I wish we could do a whole bunch of episodes just on lsof because it's such an amazing and useful command. But, of course, Ed doesn't have lsof on Windows and he'd get all pouty.